Overview and Problem

The widespread use of social media has led to a concerning rise in cyberbullying, which particularly affects the well-being of younger users. Cyberbullying takes various forms, including online harassment, the dissemination of offensive content, and targeted intimidation. Although existing cyberbullying detection systems have made significant progress, they often lack a holistic approach: many analyze only text and neglect the visual and behavioral aspects of cyberbullying. The proposed system aims to close these gaps by integrating text, image, and user behavior analysis. Its real-time monitoring and preventive measures further distinguish it from traditional approaches.

Solution

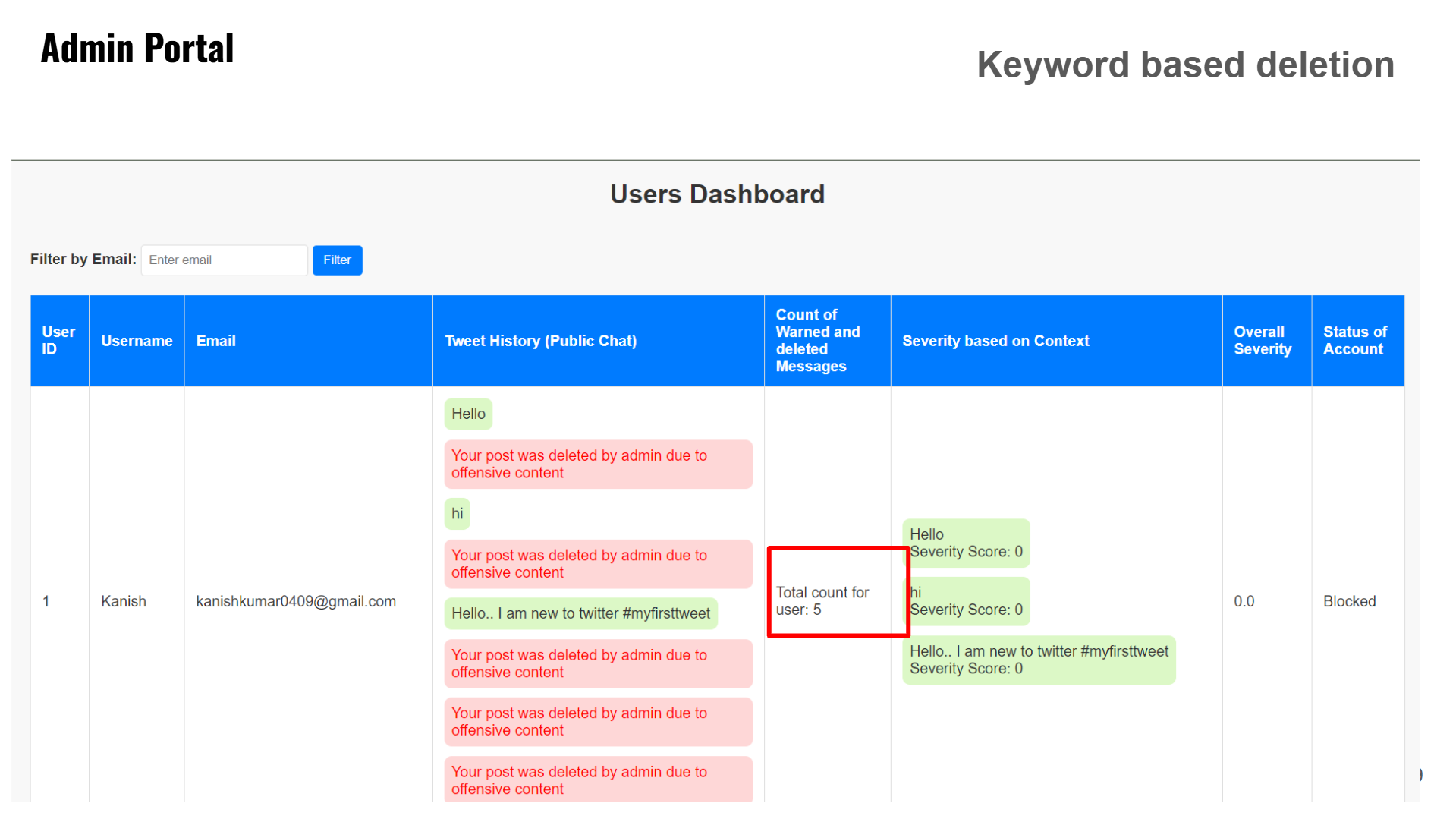

The proposed system offers a comprehensive solution for detecting cyberbullying across text, images, videos, and audio. It combines machine learning models such as Random Forest, BERT, and VGG16 to detect harmful content in text and multimedia and to assess its severity. For text, offensive keywords are flagged and the surrounding context is analyzed to produce a severity score. For images and videos, a hybrid of convolutional and recurrent neural networks (CNN-RNN) identifies cyberbullying content. Audio is first transcribed to text and then classified with a Random Forest model.

A prevention mechanism responds according to severity: it warns users, automatically deletes offensive posts, and temporarily suspends accounts. An admin panel lets moderators monitor user activity, and the performance of the detection models is evaluated with metrics such as accuracy and F1 score.
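The text branch described above (keyword flagging, context-based severity scoring, and severity-graded prevention actions) can be sketched as follows. This is a minimal sketch under stated assumptions, not the system's actual implementation: the keyword lexicon and its weights, the mixing weight `alpha`, the action thresholds, and the names `flag_keywords`, `severity_score`, `choose_action`, and `assess` are all hypothetical. The `context_prob` argument stands in for the probability produced by a contextual classifier such as a fine-tuned BERT model, which is not shown here.

```python
import re
from dataclasses import dataclass

# Hypothetical lexicon mapping offensive words to weights in [0, 1].
# A real deployment would use a curated, much larger list.
OFFENSIVE_KEYWORDS = {
    "idiot": 0.4,
    "loser": 0.4,
    "stupid": 0.3,
    "kill": 0.9,
}


@dataclass
class Assessment:
    flagged: list[str]  # lexicon words found in the post
    severity: float  # combined score in [0, 1]
    action: str  # prevention action chosen for this severity


def flag_keywords(text: str) -> list[str]:
    """Return lexicon words that occur as whole tokens (case-insensitive)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [tok for tok in tokens if tok in OFFENSIVE_KEYWORDS]


def severity_score(flagged: list[str], context_prob: float, alpha: float = 0.5) -> float:
    """Blend the strongest keyword weight with a context model's probability.

    context_prob is assumed to come from a contextual classifier (for
    example a fine-tuned BERT model); alpha is an assumed mixing weight.
    """
    keyword_part = max((OFFENSIVE_KEYWORDS[w] for w in flagged), default=0.0)
    return alpha * keyword_part + (1 - alpha) * context_prob


def choose_action(severity: float) -> str:
    """Map severity to a graded response; the thresholds are illustrative."""
    if severity >= 0.8:
        return "suspend"  # temporarily suspend the account
    if severity >= 0.5:
        return "delete"  # automatically delete the post
    if severity >= 0.3:
        return "warn"  # warn the user
    return "allow"


def assess(text: str, context_prob: float) -> Assessment:
    """Flag keywords, score severity, and pick a prevention action."""
    flagged = flag_keywords(text)
    severity = severity_score(flagged, context_prob)
    return Assessment(flagged, severity, choose_action(severity))
```

For example, `assess("I will kill you", 0.95)` returns the action `"suspend"`, while `assess("have a nice day", 0.1)` returns `"allow"`. Taking the strongest keyword weight rather than summing all matches keeps the score in [0, 1] whenever both inputs lie in that range, so a single set of thresholds applies to every post.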
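The evaluation metrics named above, accuracy and F1 score, can be computed directly from a model's predictions. The sketch below uses only the standard library and assumes binary labels, with `1` meaning cyberbullying; the function names are illustrative. F1 is worth reporting alongside accuracy because cyberbullying datasets are often imbalanced, so a model that rarely predicts the positive class can still achieve high accuracy.

```python
from typing import Sequence


def _check(y_true: Sequence[int], y_pred: Sequence[int]) -> None:
    """Reject empty or mismatched label sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equally long")


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    _check(y_true, y_pred)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for the positive class.

    Uses the equivalent form 2*TP / (2*TP + FP + FN) and returns 0.0
    when the positive class appears in neither the labels nor the predictions.
    """
    _check(y_true, y_pred)
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0
```

On `y_true = [0] * 9 + [1]` with all-zero predictions, `accuracy` returns 0.9 while `f1_score` returns 0.0, which shows why accuracy alone can overstate how well a detector catches the minority cyberbullying class.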